DeepSeek has reached OpenAI o1-level reasoning by combining pure Reinforcement Learning (RL) with a multi-stage training process, tackling longstanding challenges in training reasoning models. This breakdown explains how the approach works and why it makes advanced AI more accessible, with no AI PhD required.

Explained

DeepSeek just made a breakthrough: you can train a model to match OpenAI o1-level reasoning using pure reinforcement learning (RL), without labeled data (DeepSeek-R1-Zero). But RL alone isn’t perfect: it can lead to problems like poor readability. Combining several methods in a multi-stage training process fixes these (DeepSeek-R1).

The launch of GPT-4 forever changed the AI industry. But today, it feels like an iPhone 4 compared to the next wave of reasoning models (e.g. OpenAI o1).

These “reasoning models” introduce a chain-of-thought (CoT) thinking phase before generating an answer at inference time, which in turn improves their reasoning performance.

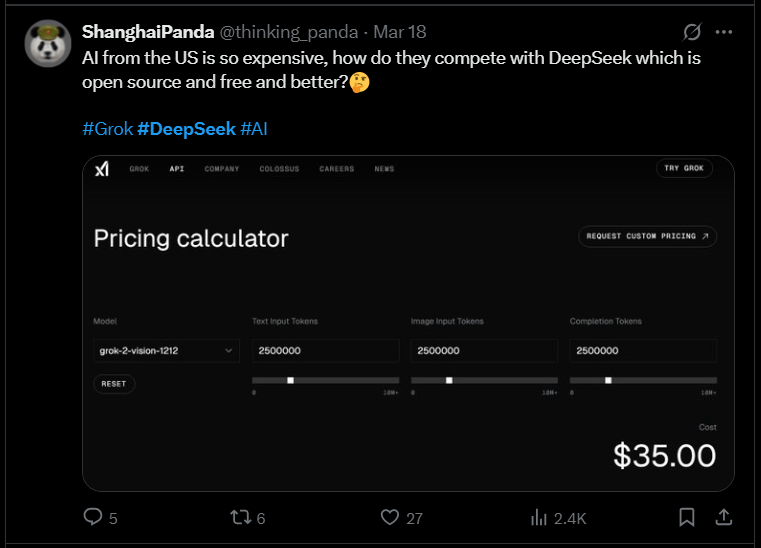

While OpenAI kept their methods under wraps, DeepSeek is taking the opposite approach: sharing their progress openly and earning praise for staying true to the open-source mission. Or, as Marc put it best:

This open-source reasoning model is as good as OpenAI’s o1 in tasks like math, coding, and logical reasoning, which is a huge win for the open-source community… and the world (Marc, your words not ours!)

As someone who spends a lot of time working with LLMs and guiding others on how to use them, I decided to take a closer look at the DeepSeek-R1 training process. Using their paper as my guide, I pieced it all together and broke it down into something anyone can follow—no AI PhD required. Hopefully you’ll find it useful!

Now, let’s start with the fundamentals.

A quick primer

To better understand the backbone of DeepSeek-R1, let’s cover the basics:

Reinforcement Learning (RL): A model learns by receiving rewards or penalties based on its actions, improving through trial and error. In the context of LLMs, this can involve policy optimization methods (e.g., Proximal Policy Optimization, PPO), value-based approaches (e.g., Q-learning), or hybrid strategies (e.g., actor-critic methods). Example: When training on a prompt like “2 + 2 =”, the model receives a reward of +1 for outputting “4” and a penalty of -1 for any other answer. In modern LLMs, rewards are often determined by human-labeled feedback (RLHF) or, as we’ll soon learn, by automated rule-based scoring, as used with GRPO.
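The “2 + 2 =” example can be written as a tiny reward function. This is only a toy sketch: the `reward` function and its hard-coded answer key are made up for illustration and are not taken from any real training setup.

```python
def reward(prompt: str, completion: str) -> int:
    """Toy reward: +1 if the completion is the expected answer, -1 otherwise."""
    expected = {"2 + 2 =": "4"}  # hypothetical answer key, for illustration only
    return 1 if completion.strip() == expected.get(prompt) else -1


samples = ["4", "5", " 4 ", "four"]
scores = [reward("2 + 2 =", s) for s in samples]
print(scores)  # [1, -1, 1, -1]
```

During training, scores like these are what the RL algorithm uses to decide which outputs to make more likely.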

Supervised fine-tuning (SFT): A base model is re-trained using labeled data to perform better on a specific task. Example: Fine-tune an LLM using a labeled dataset of customer support questions and answers to make it more accurate in handling common queries. Great to use if you have an abundance of labeled data.

Cold start data: A minimally labeled dataset used to help the model get a general understanding of the task. Example: Fine-tune a chatbot with a simple dataset of FAQ pairs scraped from a website to establish a foundational understanding. Useful when you don’t have a lot of labeled data.

Multi-stage training: A model is trained in phases, each focusing on a specific improvement, such as accuracy or alignment. Example: Train a model on general text data, then refine it with reinforcement learning on user feedback to improve its conversational abilities.

Rejection sampling: A method where a model generates multiple potential outputs, but only the ones that meet specific criteria, such as quality or relevance, are selected for further use. Example: After an RL process, a model generates several responses, but only keeps those that are useful for retraining the model.
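Rejection sampling is easy to picture in code. The sketch below is a minimal illustration, assuming the candidates have already been generated; `rejection_sample` and `toy_score` are hypothetical names, and the scoring rule is a stand-in for whatever quality check a real pipeline would use.

```python
from typing import Callable, List


def rejection_sample(candidates: List[str],
                     score: Callable[[str], float],
                     threshold: float) -> List[str]:
    """Keep only the candidates whose score meets the threshold."""
    return [c for c in candidates if score(c) >= threshold]


def toy_score(text: str) -> float:
    """Hypothetical quality check: 1.0 if the text ends with the right answer."""
    return 1.0 if text.rstrip(".").endswith("144") else 0.0


candidates = [
    "12 * 12 = 144",
    "12 * 12 = 124",
    "Twelve squared is 144.",
]
kept = rejection_sample(candidates, toy_score, threshold=1.0)
print(kept)  # ['12 * 12 = 144', 'Twelve squared is 144.']
```

The surviving outputs can then be reused as training examples, which is how the method shows up later in the R1 pipeline.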

First model: DeepSeek-R1-Zero

The team at DeepSeek wanted to test whether it’s possible to train a powerful reasoning model using pure reinforcement learning (RL). This form of “pure” reinforcement learning works without labeled data.

Skipping labeled data? Seems like a bold move for RL in the world of LLMs.

I’ve learned that pure RL is slower upfront (trial and error takes time), but it eliminates the costly, time-intensive labeling bottleneck. In the long run, it should be faster, more scalable, and far more efficient for building reasoning models, mostly because they learn on their own.

DeepSeek successfully completed a pure-RL training run, matching OpenAI o1’s performance.

Calling this a “huge accomplishment” feels like an understatement: it’s the first time anyone’s made this work. Then again, maybe OpenAI did it first with o1, but we’ll never know, will we?

The biggest question on my mind was: ‘How did they make it work?’

Let’s cover what I found out.

Using the GRPO RL framework

Traditionally, RL for training LLMs has been most successful when combined with labeled data (e.g., the PPO RL framework). This RL approach employs a critic model that’s like an “LLM coach”, giving feedback on each move to help the model improve. It evaluates the LLM’s actions against labeled data, estimating how likely the model is to succeed (the value function) and guiding the model’s overall strategy.

The challenge?

This approach is limited by the labeled data it uses to evaluate decisions. If the labeled data is incomplete, biased, or doesn’t cover the full range of tasks, the critic can only provide feedback within those constraints — and it won’t generalize well.

Enter GRPO!

The authors used the Group Relative Policy Optimization (GRPO) RL framework (invented by the same team, wild!) which eliminates the critic model.

With GRPO, you skip the “coach”: the LLM’s outputs are scored over multiple rounds using predefined rules such as coherence and/or fluency. The model learns by comparing each output’s score to the average of its group.
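The “compare to the group’s average” idea can be sketched in a few lines. In the published GRPO formulation, as I understand it, each output’s reward is normalized by the mean and standard deviation of the rewards in its group. The function name, the `eps` term, and the use of the population standard deviation are my own choices for this sketch.

```python
from statistics import mean, pstdev
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Score each output relative to its own group: (r - group mean) / group std."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; eps guards against division by zero
    return [(r - mu) / (sigma + eps) for r in rewards]


# Rewards for four outputs sampled from the same prompt (made-up numbers).
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print([round(a, 3) for a in advantages])  # [1.0, -1.0, -1.0, 1.0]
```

Outputs that beat their group’s average get a positive advantage and are reinforced; the rest are pushed down. No separate critic model is needed to produce the baseline.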

But wait, how did they know if these rules are the right rules?

In this method, the rules aren’t perfect—they’re just a best guess at what “good” looks like. These rules are designed to catch patterns that usually make sense, like:

- Does the answer make sense? (Coherence)

- Is it in the right format? (Completeness)

- Does it match the general style we expect? (Fluency)

For example, on mathematical tasks, the DeepSeek-R1-Zero model could be rewarded for producing outputs that adhered to mathematical principles or logical consistency, even without knowing the exact answer.

It makes sense... and it works!
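To make “rules” concrete, here is a minimal sketch of one rule that can be checked without knowing the correct answer: a format check. It assumes the model is asked to wrap its reasoning in `<think>` tags before the final answer. The exact pattern and the 0/1 scoring are illustrative assumptions, not DeepSeek’s actual reward code.

```python
import re

# Assumed output layout: <think>reasoning</think> followed by a final answer.
THINK_PATTERN = re.compile(r"^<think>(.+?)</think>\s*(.+)$", re.DOTALL)


def format_reward(output: str) -> float:
    """Return 1.0 if the output follows the expected layout, else 0.0."""
    return 1.0 if THINK_PATTERN.match(output.strip()) else 0.0


print(format_reward("<think>2 + 2 is 4</think> 4"))  # 1.0
print(format_reward("4"))                            # 0.0
```

A rule like this needs no labels at all, which is what makes it cheap to apply at scale.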

The DeepSeek-R1-Zero model performed strongly on reasoning benchmarks. On AIME 2024 (a prestigious mathematics competition for high school students), it reached a pass@1 score of 71.0%, rising to 86.7% with majority voting, which matches the performance of OpenAI-o1-0912. While this seems like the biggest breakthrough from this paper, the R1-Zero model did come with a few challenges: poor readability and language mixing.
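If the metric is unfamiliar: pass@1 is roughly the chance that a single sampled answer is correct, while majority voting samples several answers and keeps the most common one. The sketch below uses made-up answers for a single problem; the function names are hypothetical.

```python
from collections import Counter
from typing import List


def pass_at_1(samples: List[str], truth: str) -> float:
    """Fraction of sampled answers that are correct (an estimate of pass@1)."""
    return sum(s == truth for s in samples) / len(samples)


def majority_vote_correct(samples: List[str], truth: str) -> bool:
    """True if the most frequent sampled answer is the correct one."""
    most_common_answer, _ = Counter(samples).most_common(1)[0]
    return most_common_answer == truth


samples = ["204", "204", "210", "204", "198"]  # made-up answers to one problem
print(pass_at_1(samples, "204"))              # 0.6
print(majority_vote_correct(samples, "204"))  # True
```

This is why a voting score can sit well above pass@1: occasional wrong samples get outvoted.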

Second model: DeepSeek-R1

Poor readability and language mixing are things you’d expect from using pure RL, without the structure or formatting provided by labeled data.

Now, with this paper, we can see that multi-stage training can mitigate these challenges. Training the DeepSeek-R1 model involved several methods, applied in sequence.

Here’s a quick explanation of each training stage and what was done:

Step 1: They fine-tuned a base model (DeepSeek-V3-Base) using thousands of cold-start data points, which is a tiny fraction compared to the millions or even billions of labeled data points needed for large-scale supervised learning.

Step 2: They applied pure RL (as with R1-Zero) to improve reasoning skills.

Step 3: Near RL convergence, they utilized rejection sampling, allowing the model to generate its own labeled synthetic data by selecting the best examples from the recent successful RL run.

Step 4: Merged the new synthetic data with supervised data from DeepSeek-V3-Base in areas such as writing and factual QA, enabling the model to learn from high-quality outputs and diverse domain-specific knowledge.

Step 5: After fine-tuning on the new data, the model went through a final RL process across varied prompts and scenarios.

This multi-stage process feels like hacking — so why does DeepSeek-R1 utilize it?

Because each step builds on the last, enhancing foundation, reasoning, data quality, and overall generalization.

These steps lead to the DeepSeek-R1 model achieving high scores across the benchmarks reported in the paper.

CoT at inference time relies on RL

To effectively use chain-of-thought reasoning at inference, models need reinforcement learning training that promotes step-by-step reasoning. It’s crucial for achieving high-level reasoning. The question arises: why did OpenAI keep their training methods secret, given the seemingly straightforward multi-stage process of the o1 model?

They clearly employed RL, synthesized data from RL checkpoints, and used supervised training for clarity. What was the strategic advantage in delaying competition (R1) by just a couple of months?

I guess time will tell.

How to use DeepSeek-R1

To use DeepSeek-R1, you can test it on their free platform or acquire an API key for integration with AI development platforms like Vellum or Fireworks AI.

The hosted model costs $0.55 per million input tokens and $2.19 per million output tokens, making it roughly 27 times cheaper than OpenAI’s o1 model for both inputs and outputs.
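The price comparison is simple arithmetic. The DeepSeek-R1 prices come from the text above; the o1 prices ($15 and $60 per million tokens) are my assumption about OpenAI’s list prices at the time, so check current pricing before relying on the ratio.

```python
# DeepSeek-R1 prices quoted in the article (USD per million tokens).
R1_INPUT, R1_OUTPUT = 0.55, 2.19
# Assumed OpenAI o1 list prices at the time of writing (USD per million tokens).
O1_INPUT, O1_OUTPUT = 15.00, 60.00

input_ratio = O1_INPUT / R1_INPUT
output_ratio = O1_OUTPUT / R1_OUTPUT
print(f"input: {input_ratio:.1f}x, output: {output_ratio:.1f}x")
# input: 27.3x, output: 27.4x
```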

This API version supports a maximum context length of 64K but lacks function calling and JSON outputs. It allows retrieval of both the “reasoning” and the final answer, albeit slowly, which is acceptable for reasoning models as speed isn’t the priority.

Additionally, it does not support several other parameters like temperature, top_p, presence_penalty, frequency_penalty, logprobs, and top_logprobs, which complicates production use.
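Here is a rough sketch of what a request and response might look like. It assumes the API follows the OpenAI-compatible chat format, that the model id is `deepseek-reasoner`, and that the reasoning comes back in a `reasoning_content` field next to `content`. Treat all three as assumptions to verify against DeepSeek’s API docs. The code builds and parses plain dictionaries, so it runs without a network call or API key.

```python
from typing import Tuple

API_URL = "https://api.deepseek.com/chat/completions"  # assumed endpoint
MODEL = "deepseek-reasoner"                            # assumed model id


def build_payload(prompt: str) -> dict:
    """Build a chat request body; sampling parameters are left out on purpose."""
    return {"model": MODEL, "messages": [{"role": "user", "content": prompt}]}


def split_reply(response: dict) -> Tuple[str, str]:
    """Return (reasoning, final answer) from an assumed response shape."""
    message = response["choices"][0]["message"]
    return message.get("reasoning_content", ""), message.get("content", "")


# Hand-written stand-in for a real API response, for illustration only.
mock_response = {
    "choices": [{"message": {
        "reasoning_content": "2 + 2 means adding two and two.",
        "content": "4",
    }}]
}
reasoning, answer = split_reply(mock_response)
print(answer)  # 4
```

In a real integration, you would POST the payload to the endpoint with your API key in the request headers and pass the parsed JSON to `split_reply`.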

Conclusion

DeepSeek has demonstrated that significant improvements in LLM reasoning can be achieved purely through reinforcement learning (RL), without the need for labeled data. Their post-training techniques enhance performance even further.

Expect a surge of models like R1 and o1 soon. While it seemed model scaling had plateaued, this method is reopening doors for faster advancements. OpenAI took 6 months from GPT-3.5 to GPT-4, but DeepSeek achieved o1-level performance in just 2 months without prior knowledge of OpenAI’s methods.

Get ready for a new wave of models that will put o1 to shame.

Source: Materials provided by Anita Kirkovska on Vellum.AI